A scalable ML-powered service that automates pet portrait creation for printing factories, transforming customer photos into unique artwork while maintaining quality and handling high-volume demand.

A printing factory wanted to offer a scalable personalization service where customers could upload photos of their pets, and designers would create unique artwork based on those images. The existing process was slow and labor-intensive, requiring manual editing for every order. The goal was clear: automate and scale the design pipeline so a single designer could handle up to 10x more orders without compromising quality.

Building a scalable personalization pipeline involved multiple layers of complexity:

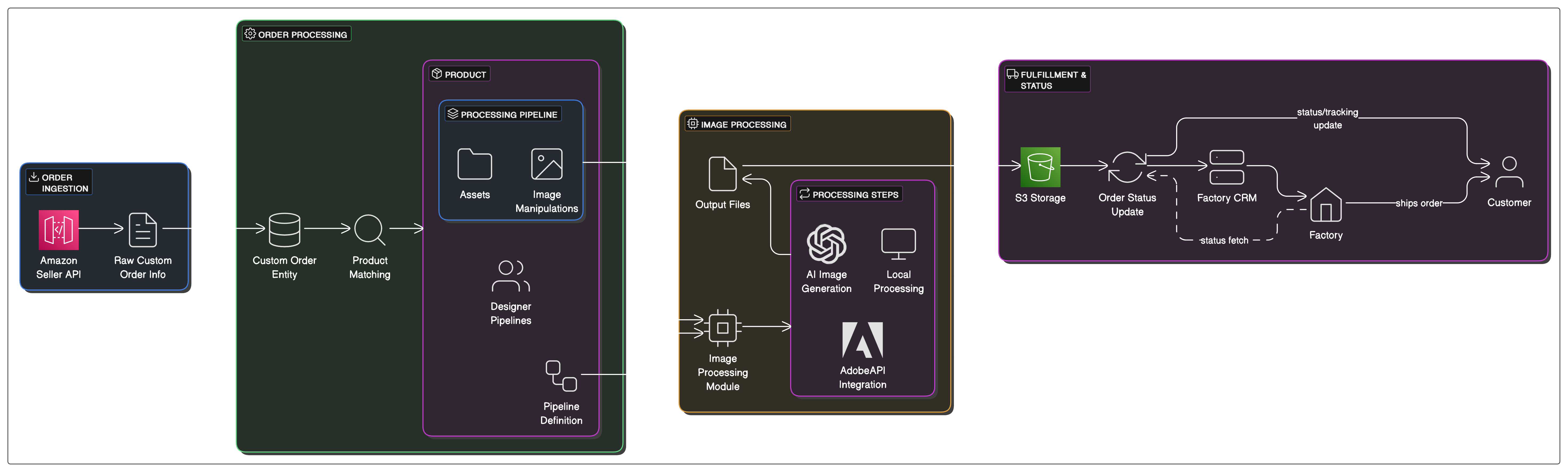

We delivered a distributed ML-powered service that automated image processing, design generation, and delivery into the factory's workflow.

Orders and customer images were ingested automatically via the Amazon Seller API, then parsed into structured entities, with validation flows for image quality and metadata.
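As a rough illustration of the parse-and-validate step, the sketch below turns a raw order payload into a structured entity and collects validation errors. The payload shape, field names, and thresholds (`MIN_SIDE_PX`, `ALLOWED_FORMATS`) are assumptions made for the example, not the values used in the project.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; the real limits are not stated in this write-up.
MIN_SIDE_PX = 1024
ALLOWED_FORMATS = {"jpeg", "png"}


@dataclass
class PetOrder:
    """Structured view of one order (field names are illustrative)."""
    order_id: str
    image_url: str
    image_format: str
    width_px: int
    height_px: int
    errors: list[str] = field(default_factory=list)


def parse_order(raw: dict) -> PetOrder:
    """Turn a raw order payload (assumed shape) into a structured entity."""
    return PetOrder(
        order_id=str(raw["order_id"]),
        image_url=raw["image_url"],
        image_format=raw["image_format"].lower(),
        width_px=int(raw["width_px"]),
        height_px=int(raw["height_px"]),
    )


def validate(order: PetOrder) -> PetOrder:
    """Collect errors instead of raising, so bad orders can go to manual review."""
    if order.image_format not in ALLOWED_FORMATS:
        order.errors.append(f"unsupported format: {order.image_format}")
    if min(order.width_px, order.height_px) < MIN_SIDE_PX:
        order.errors.append("image resolution too low for print")
    return order
```

Collecting errors on the entity, rather than raising on the first failure, lets a single pass report every problem with an upload.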

We fine-tuned Stable Diffusion-based models for pet artwork generation. A multi-step pipeline combined inpainting, style transfer, and subject-consistency modules, followed by automated post-processing with Pillow and Adobe API integration.
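The multi-step structure can be pictured as a chain of stages applied in order. The sketch below only shows that composition: each stage is a placeholder that records its name, not a real model or Pillow call, and the stage order and the names `Artwork`, `make_stage`, and `run_pipeline` are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Artwork:
    """Carries the image reference and a log of the stages applied to it."""
    source_image: str
    history: list[str] = field(default_factory=list)


Stage = Callable[[Artwork], Artwork]


def make_stage(name: str) -> Stage:
    """Build a placeholder stage; a real one would call a model or image library."""
    def stage(art: Artwork) -> Artwork:
        art.history.append(name)
        return art
    return stage


# Assumed ordering; the write-up names the modules but not their sequence.
PIPELINE: list[Stage] = [
    make_stage("inpainting"),
    make_stage("style_transfer"),
    make_stage("subject_consistency"),
    make_stage("post_processing"),
]


def run_pipeline(art: Artwork, stages: list[Stage] = PIPELINE) -> Artwork:
    """Apply each stage in order, passing the result to the next one."""
    for stage in stages:
        art = stage(art)
    return art
```

Keeping every stage behind the same signature means an individual module can be swapped or skipped without touching the rest of the chain.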

Local models were deployed in AWS ECS clusters with GPU-backed scaling. During traffic spikes, work was offloaded to external API calls triggered by AWS Lambda and SQS. Outputs were stored in AWS S3 and synced with the factory CRM for order tracking.
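One way to think about the spike-offloading decision is as a simple capacity check: keep jobs on the local GPU workers while the backlog fits, and route the overflow to the external API. The sketch below is a minimal version of that rule with no AWS calls; `ClusterState`, `choose_backend`, and the per-worker backlog figure are hypothetical, and the actual routing logic in the project is not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterState:
    queued_jobs: int      # jobs waiting for a local GPU worker
    gpu_workers: int      # GPU workers currently running
    jobs_per_worker: int  # hypothetical backlog each worker can absorb


def choose_backend(state: ClusterState) -> str:
    """Return 'local_gpu' while the backlog fits local capacity, else 'external_api'."""
    capacity = state.gpu_workers * state.jobs_per_worker
    return "local_gpu" if state.queued_jobs < capacity else "external_api"
```

For example, with 2 workers that can each absorb 4 queued jobs, a backlog of 3 stays local, while a backlog of 8 is sent to the external API.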

Designers reviewed AI-generated drafts through a custom CMS dashboard. The system allowed light manual corrections while reducing the initial editing workload by 80–90%.

This project demonstrates end-to-end ML engineering: from model fine-tuning to production deployment, handling real-world scalability challenges while maintaining quality standards.